Reframed a low-adoption private beta into a scalable, monetizable agent platform ahead of public launch.

Scope

Defined system architecture and UX patterns

Built cross-org AI design system and patterns

Aligned strategy and delivery with ML engineers, PMs, legal, and business stakeholders

Enabled internal product teams and unlocked dependencies

Impact

Enabled new usage-based monetization stream

800+ customer-built agents within 4 months

CSAT 4.7 out of 5

Established platform powering all AI products

Influenced 2025/26 AI product roadmap

Context

A private beta with low traction

Discovery

Understanding what blocked us before going public

🔥

Backend-driven UX → high friction to value

The UX mirrored backend abstractions rather than user goals, requiring users to understand API concepts and write effective prompts before seeing meaningful value.

🔥

Lack of observability → low trust

Users had zero visibility into how agents behaved in the wild, making it difficult to understand outcomes, debug issues, or trust the system.

🔥

User flexibility vs. subscription model

User needs for flexibility (e.g. LLM choice) conflicted with a subscription-based model and LLM cost structure, limiting adoption and making scale economically unsustainable.

A big decision

The pivot decision: start from zero

The discovery work made it clear that the core blockers were structural rather than incremental. They constrained trust, adoption, and scalable growth in ways that incremental UX improvements could not realistically address.

Partnering with PM and engineering, we faced a hard decision: continue patching a product that struggled to scale, or reset the foundation around a clearer user mental model and usage-based growth.

✅ Rebuild 0→1

8 weeks to MVP

Scalable foundation for entire AI suite

Enable a monetization model tied directly to API usage

Support both internal and external AI products with shared infrastructure

❌ Fix existing product

Product limited to RAG only

Months of incremental improvements

Revenue ceiling remains

Limited UX improvement opportunities tied to API design

Approach #1

Building user empathy through a vision hero story

The team was highly technical but lacked a shared understanding of who we were building for. To align on user value before committing to architecture, I led a cross-functional storyboard workshop with PM, engineering, and ML.

Key insight

The team was thinking about API endpoints and backend objects. Users were thinking about business outcomes and capabilities.

Result

This shifted our approach from backend-driven structure (Config → Tools → Deploy) to outcome-driven design (Goal → Capabilities → Test).

Approach #2

User-goal-driven API design

Before any technical implementation, I designed the complete user journey and information architecture. This became the baseline for API schema design.

1. Defined new information architecture & user mental model

2. Co-created the API schema with engineers

3. Backend implementations + UX work in parallel

Result

Clean backend-to-frontend mapping. This required upfront alignment across teams, but reduced long-term rework and prevented the UX from drifting back toward backend-driven abstractions.
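To make the idea of a schema that mirrors the user's mental model more concrete, here is a minimal sketch in Python. It is illustrative only: the names `AgentDraft`, `Capability`, `SamplePrompt`, `missing_steps`, and `to_api_payload` are hypothetical and do not describe Agent Studio's actual API. The point is that the top-level fields follow the Goal → Capabilities → Test journey rather than backend objects.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class Capability:
    """Something the agent can do, named in user terms (hypothetical)."""
    name: str
    description: str


@dataclass
class SamplePrompt:
    """A prompt the user wants to try against the agent (hypothetical)."""
    prompt: str


@dataclass
class AgentDraft:
    """Fields are ordered the way the user thinks: Goal -> Capabilities -> Test."""
    goal: str
    capabilities: list[Capability] = field(default_factory=list)
    tests: list[SamplePrompt] = field(default_factory=list)

    def missing_steps(self) -> list[str]:
        """Return the journey steps the user has not completed yet."""
        missing = []
        if not self.goal.strip():
            missing.append("goal")
        if not self.capabilities:
            missing.append("capabilities")
        if not self.tests:
            missing.append("test")
        return missing

    def to_api_payload(self) -> dict:
        """Serialize with the same field names the UI shows, so both sides stay aligned."""
        return asdict(self)
```

Because the payload keys match the steps of the user journey, the UI can drive its progress state directly from something like `missing_steps()` without translating between backend and frontend vocabularies.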

Core platform features

Building a platform that can scale across products and teams

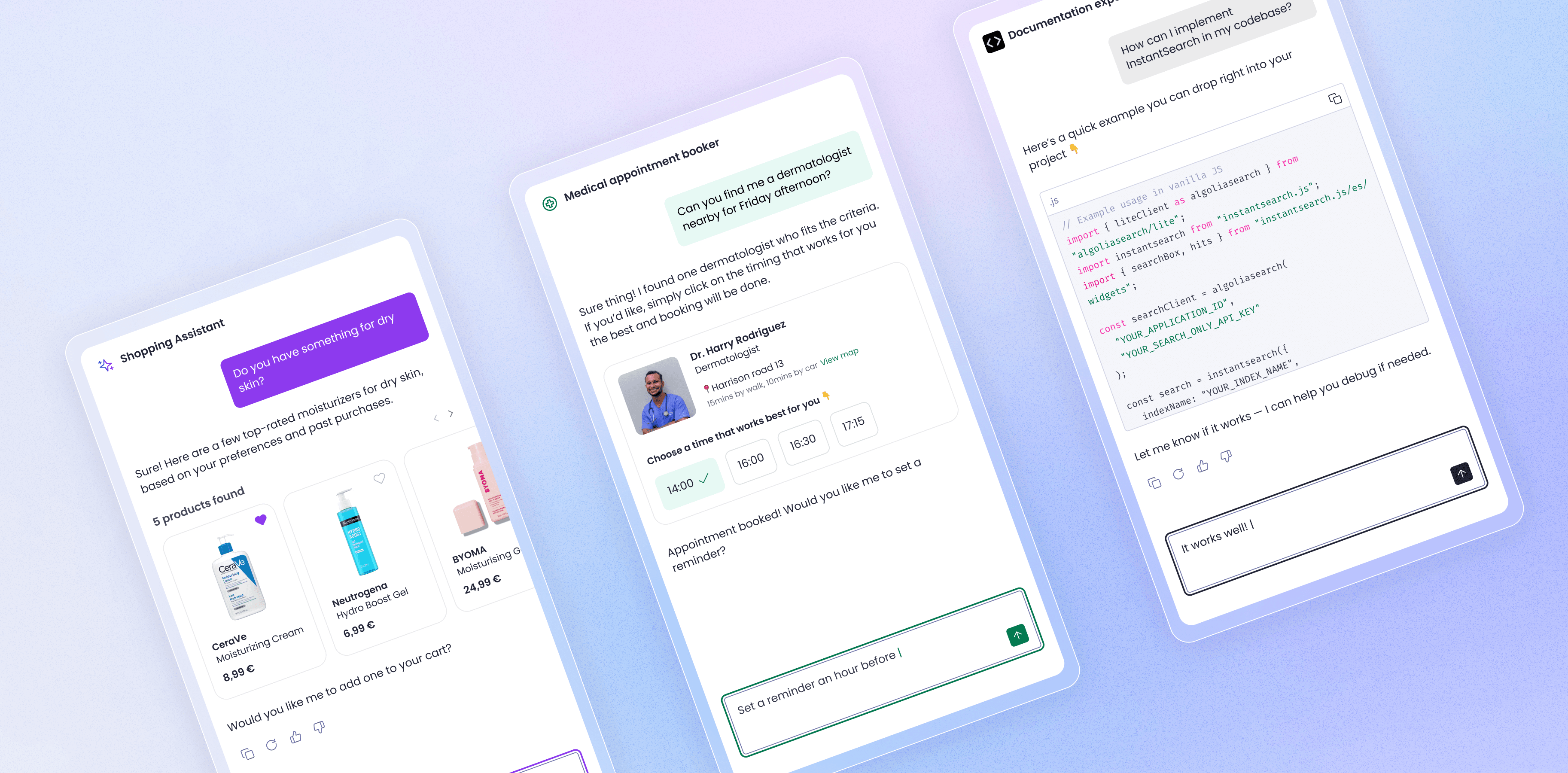

Agent Studio was designed not just for individual builders, but as infrastructure that other teams and external developers could build upon.

1️⃣ Lower entry barrier and make testing easy

Problem

Blank canvas paralysis. Users had to write instructions, configure tools, and connect their own LLM before they could start testing and see value.

Solution

Pre-built templates with optimized prompts and tools pre-selected. Users can start from a template and test with free LLM API access.

2️⃣ Configure and test side-by-side

Problem

AI configuration felt abstract. Users couldn't see how changes affected agent behavior, making iteration slow and frustrating.

Solution

Split-screen interface: configure instructions, tools, and memory on the left; instantly validate agent responses on the right. This became the defining interaction pattern of Agent Studio.

Trade-off

Intentionally excluded advanced technical controls, prioritizing clarity and speed for business users. This limited early flexibility for technical users, but it let us introduce complexity progressively based on real usage.

3️⃣ Ability to monitor your agent and iterate

Problem

Lack of observability made it difficult to trust, debug and iterate on agent behavior.

Solution

Built-in monitoring dashboard showing key health metrics such as response time, search calls, and user engagement trends. Users can also review individual conversations when they need to investigate further.

4️⃣ Agent memory layer

Problem

Agents lacked context. It was like chatting with someone who forgets you each time.

Solution

Exposed memory as a first-class feature. Users control retention windows, see what was remembered, and understand why agents respond as they do.
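As a rough illustration of what a user-controlled retention window could look like in practice, the Python sketch below filters stored memories by age and keeps a human-readable reason next to each one. The `MemoryEntry` type, its fields, and `visible_memories` are assumptions made for this example, not the product's real data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class MemoryEntry:
    """One remembered fact (hypothetical shape)."""
    content: str
    created_at: datetime
    reason: str  # why it was remembered, so the UI can show it to the user


def visible_memories(entries: list[MemoryEntry],
                     retention_days: int,
                     now: datetime) -> list[MemoryEntry]:
    """Keep only entries that fall inside the user's retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [entry for entry in entries if entry.created_at >= cutoff]
```

Passing `now` explicitly keeps the function deterministic, which makes it easy to preview how a shorter or longer retention window would change what the agent remembers.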

5️⃣ Tighter ecosystem integration → direct revenue impact

Problem

Agent Studio was isolated from Algolia's core products.

Solution

4 months post-launch

Early adoption validates platform foundation

800+ agents created

4.7/5 CSAT

100K+ API calls

Adopted by internal teams, powering AI Assistant and AI product experiences

Generated usage-based revenue through search API calls

Established platform layer enabling ecosystem integrations

Learning and iterations

Building trust at scale

Early adoption validated Agent Studio as a powerful environment for rapid prototyping and testing. As more teams explore production use cases, the next phase of work focuses on enabling trust, safety, and governance at scale.

🚀

User-controlled guardrails for production readiness

Designing safety controls that set boundaries, apply constraints, and put safeguards in place, helping users trust agents in real-world scenarios.

🧩

Tool adoption needed clearer value communication

Adoption of the Algolia Search tool has been lower than expected. We’re improving how we communicate its impact on real outcomes.

🚨

Platform-level safety & policy enforcement

Partnering with legal and ML teams to define acceptable agent behavior, prevent harmful outputs, and support responsible AI usage in real-world environments.

Reflections

What I learned

✨ What worked

Co-designing APIs with engineers to mirror the user’s mental model was key to making the experience intuitive, scalable, and resilient.

Vision storyboarding helped shift the team from backend-driven abstractions to outcome-driven design, creating shared clarity before committing to architecture.

🪄 What I'd do differently

Quantitative discovery: We lacked baseline metrics. I would invest 2 weeks upfront in quantitative analysis and baseline setting before launching a new version.

Proactive research: Post-launch iteration was reactive in the beginning. I would implement continuous research earlier.